Hello HJ1AN,

There should not really be a TV program about AirAsia yet, because the report is still due. It was promised for August, but, well, we'll have to wait a bit longer.

Kind regards, Vincent

Airbus madness

Forum rules

Please refrain from discussing politics.

Re: Airbus madness

Hello Jwocky,

You said:

I don't see how any of them could have saved that plane at this point. But hey, who knows?

For me it is not about saving the plane, but about doing the correct inputs for having any chance to save the plane. Doing the opposite is a definite fail.

Kind regards, Vincent

- KL-666

- Posts: 781

- Joined: Sat Jan 19, 2013 2:32 pm

Re: Airbus madness

You ask for evidence, but as a layman I have to get it from other sources, like:

- Accident reports, in which I read more and more often about pilots not being able to perform basic flying tasks.

- Official reports (like the FAA's) with serious concerns about pilots' flying abilities.

- Pilot accounts that show how bad the situation really can be.

Why disqualify all these people? Are the FAA, NTSB and the like wrong, and the pilots lying? And do you know it all better than them?

Ah well, let's delve into some actual numbers and think for ourselves. Do we agree that the trend toward automation in cockpits has been steadily increasing from, say, 1980 to today? I think that's a reasonable proposition to start with.

This is the annual passenger volume carried by airplanes worldwide - the trend goes upward:

This is the annual passenger volume at a particular airport (Barcelona):

We see that the upward trend isn't driven solely by adding flights in previously unserved regions; passenger throughput at individual airports is also rising steeply.

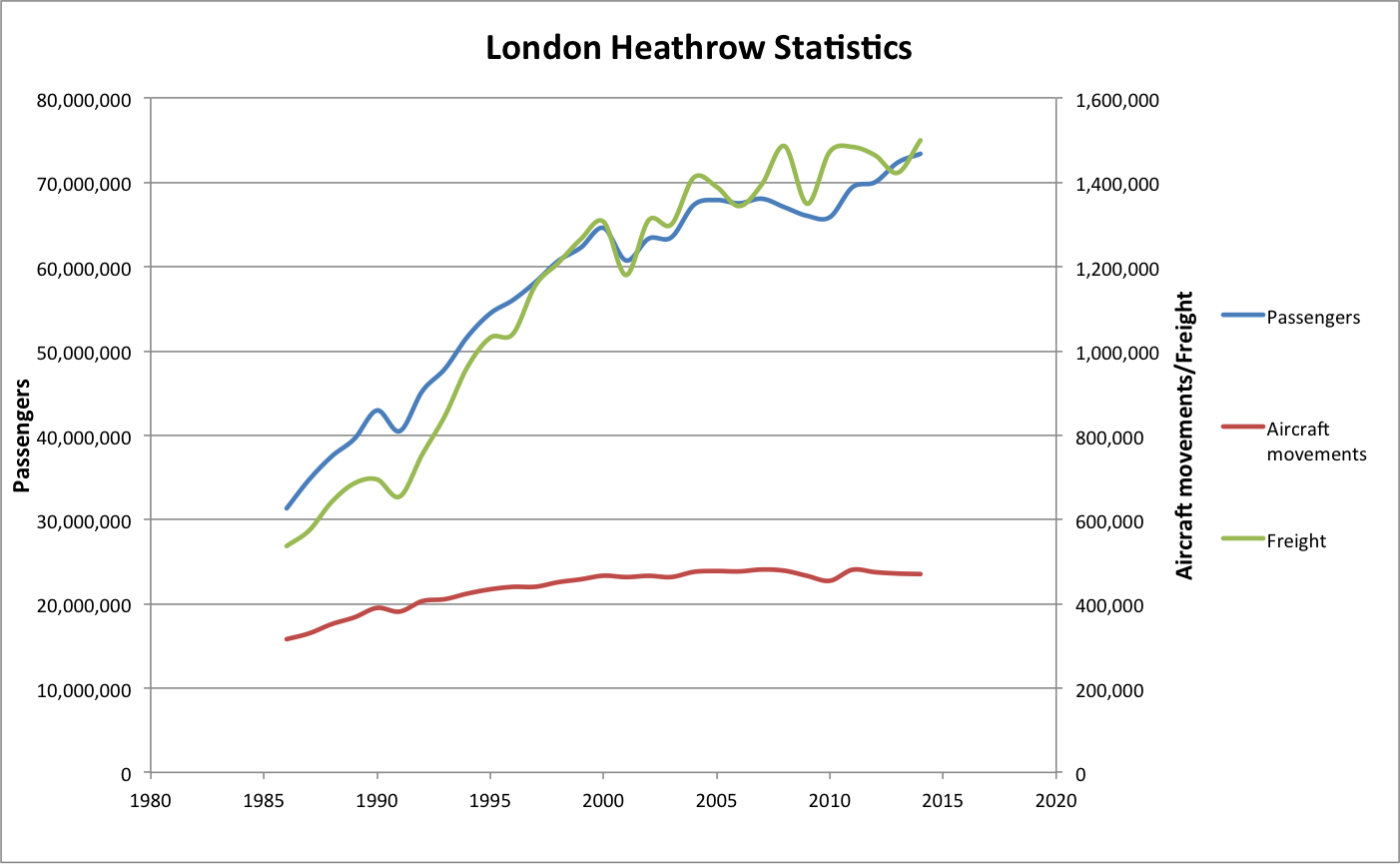

This is London Heathrow:

You can see that even at such a busy airport, not only do passenger numbers grow (larger planes), but there is also a trend toward more aircraft movements year by year.

(add another 100 plots of the same sort here)

Year by year, more passengers are moved - that's a fact. Year by year, more planes take off and land in busy hubs - another fact. We started from the premise that since 1980 automation has been increasing.

Combining these three things allows us to form an expectation:

If automation and other advances in technology changed nothing in the safety of flights, we'd expect the number of accidents to scale (on average) with aircraft movements (i.e. increase) and the number of fatalities to scale with passenger volume (i.e. also increase).

If automation made flying less safe, we'd expect accident numbers to grow more steeply than movements and fatalities to grow more steeply than passenger volume.
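The two expectations above amount to comparing accidents *per movement* across periods rather than raw counts. A minimal sketch; the function names, the 5% tolerance and the numbers in the example are invented for illustration and are not real statistics.

```python
def accidents_per_million(accidents: int, movements: int) -> float:
    """Accident rate normalized to one million aircraft movements."""
    return accidents / movements * 1_000_000


def safety_trend(before: tuple[int, int], after: tuple[int, int], tol: float = 0.05) -> str:
    """Compare two (accidents, movements) periods.

    If safety were unchanged, accident counts would scale with movements,
    so the per-movement rate would stay roughly constant (within tol).
    """
    r0 = accidents_per_million(*before)
    r1 = accidents_per_million(*after)
    if r1 > r0 * (1 + tol):
        return "less safe"
    if r1 < r0 * (1 - tol):
        return "safer"
    return "unchanged"


# Invented numbers: movements double while the accident count stays flat.
print(safety_trend((100, 10_000_000), (100, 20_000_000)))  # safer
```

Note that a rising absolute accident count can still mean "safer" if movements grow faster, which is why raw counts alone say little.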

Here's what these numbers actually do:

Accidents and incidents:

Fatalities:

That's right - they go down. Something has made air traffic statistically a lot safer since 1980 - your chance of dying in an accident is now about a quarter of what it was back then.

See, statistics is tricky. You may find a trend in the accident reports that accidents these days are more often caused by automation problems - but you'll miss the fact that the accident rate has declined dramatically. You'll never see the accidents prevented by automation unless you also look at the total accident rate.
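The pitfall above is mixing up the *share* of accidents with a given cause and the total accident *rate*. A minimal sketch with invented figures shows how the automation-related share can quadruple while the total rate drops to a quarter:

```python
def share_and_rate(total_accidents: int, automation_related: int, movements: int) -> tuple[float, float]:
    """Share of accidents blamed on automation, and total accidents per million movements."""
    share = automation_related / total_accidents
    rate = total_accidents / movements * 1_000_000
    return share, rate


# Invented figures: same traffic, same number of automation-related accidents,
# but far fewer accidents overall in the later era.
old_share, old_rate = share_and_rate(100, 10, 10_000_000)
new_share, new_rate = share_and_rate(25, 10, 10_000_000)
print(f"automation share {old_share:.0%} -> {new_share:.0%}, "
      f"total rate {old_rate:.1f} -> {new_rate:.1f} per million movements")
# automation share 10% -> 40%, total rate 10.0 -> 2.5 per million movements
```

Someone reading only the accident reports sees the 10% -> 40% jump; the drop in total rate is only visible with the movement counts.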

In other words, the above numbers don't support your scenario at all.

It's like vaccination - you can find impressive lists of vaccination side effects, and you can estimate that 1 in 10,000 vaccinated people (an invented number to make a point) will die of complications. But that will make you miss the fact that the vaccination might save the lives of 500 of those 10,000 people - you'll never know unless you compare pre-vaccination with post-vaccination mortality figures.
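The analogy boils down to netting both effects against each other, using the post's own invented numbers (1 death caused and 500 lives saved per 10,000 vaccinated). A minimal sketch with exact fractions; the function name is hypothetical:

```python
from fractions import Fraction


def mortality_change(caused_per_person: Fraction, saved_per_person: Fraction) -> Fraction:
    """Net change in deaths per vaccinated person (negative means fewer deaths)."""
    return caused_per_person - saved_per_person


# Looking only at the side-effect list vs. looking at both effects.
side_effects_only = mortality_change(Fraction(1, 10_000), Fraction(0))
full_picture = mortality_change(Fraction(1, 10_000), Fraction(500, 10_000))
print(side_effects_only * 10_000, full_picture * 10_000)  # 1 -499
```

Per 10,000 people, the side-effect view alone reports one extra death; the full picture is 499 fewer deaths.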

Are the FAA, NTSB and the like wrong, and the pilots lying?

I happen to know a fun figure: 70% of car drivers believe that they are among the top 10% of drivers by ability. Which is to say, most drivers believe they're much better than the rest. I think it's possible that most pilots believe they're much better than their colleagues as well. Just as the first figure explains why everyone seems to meet incompetent drivers all the time, something similar could explain why pilots keep running into incompetent colleagues.
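The driver figure implies a hard lower bound on self-misjudgment: at most 10% of drivers can actually be in the top 10%, so even if every one of them is among the 70% who believe it, at least 60 of every 70 believers are wrong. A tiny sketch of that arithmetic, taking the 70% figure as quoted in the post (the function name is hypothetical):

```python
def min_share_mistaken(believe_pct: float, top_pct: float) -> float:
    """Smallest possible fraction of believers who cannot actually be in the top group."""
    if believe_pct <= top_pct:
        return 0.0  # everyone who believes it could, in principle, be right
    return (believe_pct - top_pct) / believe_pct


print(f"{min_share_mistaken(70, 10):.0%}")  # 86%
```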

If the FAA had a water-tight case, there'd be no problem getting support for a law or regulation forcing Airbus to change strategy - if only by barring purely AP-controlled planes from US airspace. The fact that this hasn't happened argues strongly that the FAA does not have a water-tight case: it may have concerns (like you and me), but no solid numbers to back them up.

And do you know it all better than them?

That's not my claim. I don't know what level of automation makes a flight safer and what doesn't. What I do know exceedingly well is how to read statistics and what their pitfalls are, how to do risk computations, and that kind of thing. I also know how to check data and hypotheses for consistency.

Again - what you have is not convincing when confronted with any numbers I could pull up. The global trends say otherwise: the introduction of more automation and other technical advances correlates with air traffic becoming about four times safer.

Edit: Crash rates by aircraft type - discarding the types with insufficient statistics, I can't see a clear difference between Boeing and Airbus either.

(Note how the Concorde leads the list as the least safe plane - had that one crash not happened, it would be the safest. That's insufficient statistics for you.)
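The Concorde remark is a small-sample effect. One way to make it visible is a Poisson confidence bound on the crash rate: with zero or one event, the plausible range is enormous. The sketch below computes a one-sided exact Poisson upper bound by bisection; the flight count of 100,000 is a hypothetical figure, not Concorde's actual record.

```python
import math


def poisson_upper_bound(k: int, conf: float = 0.95) -> float:
    """One-sided upper confidence bound on a Poisson mean after observing k events.

    Finds mu such that P(X <= k | mu) = 1 - conf, by bisection.
    """
    alpha = 1.0 - conf

    def cdf(mu: float) -> float:
        return sum(math.exp(-mu) * mu**i / math.factorial(i) for i in range(k + 1))

    lo, hi = 0.0, max(10.0, 10.0 * (k + 1))
    for _ in range(200):
        mid = (lo + hi) / 2
        if cdf(mid) > alpha:
            lo = mid  # CDF still too large: the bound lies further out
        else:
            hi = mid
    return (lo + hi) / 2


def crash_rate_interval(crashes: int, flights: int) -> tuple[float, float]:
    """Point estimate and 95% upper bound, both per million flights."""
    scale = 1_000_000 / flights
    return crashes * scale, poisson_upper_bound(crashes) * scale


# Hypothetical fleet with 100,000 flights, invented for illustration.
for crashes in (0, 1):
    est, upper = crash_rate_interval(crashes, 100_000)
    print(f"{crashes} crash(es): estimate {est:.1f}, 95% upper bound {upper:.1f} per million flights")
```

With 100,000 flights, zero crashes is still compatible with about 30 crashes per million flights at 95% confidence, and a single crash moves the point estimate from 0 to 10 per million. Types with many millions of flights get much tighter bounds, which is why discarding thinly flown types is reasonable.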

Last edited by Thorsten on Thu Sep 03, 2015 6:55 am, edited 1 time in total.

- Thorsten

- Posts: 12490

- Joined: Mon Nov 02, 2009 9:33 am

Re: Airbus madness

KL-666 wrote in Mon Aug 31, 2015 6:26 am: This is the worst image i have ever seen impersonating a pilot. Clearly this sleepy airbus person has no clue how to fly a plane. That is a general problem with airbus persons impersonating pilots.

That's pretty uninformed and disrespectful - especially given that there are actual Airbus pilots (RL, ATPL holders, type-rated) among the users of this forum - and you are really unlikely to get the kind of feedback that you need/want by making such statements.

Automation is a key part of our lives - no matter whether you are driving a car or using a cell phone. To get a driving license, you no longer need to know how an engine or transmission actually works, which also lowers the barrier to entry for new drivers.

Please don't send support requests by PM, instead post your questions on the forum so that all users can contribute and benefit

Thanks & all the best,

Hooray

Help write next month's newsletter !

pui2canvas | MapStructure | Canvas Development | Programming resources

- Hooray

- Posts: 12707

- Joined: Tue Mar 25, 2008 9:40 am

- Pronouns: THOU

Re: Airbus madness

You are right, Hooray, I have a bad habit of starting discussions far too bluntly. Sorry about that, I need to work on it.

Kind regards, Vincent

- KL-666

- Posts: 781

- Joined: Sat Jan 19, 2013 2:32 pm

Re: Airbus madness

Hello Thorsten,

I think we agree about everything you said in your latest post. Generally speaking: automation is good for safety. Also, there is not much wrong with the aircraft, so the FAA cannot, and should not, do much in terms of banning aircraft.

The problem arises in the few cases when the automation stops. Then we increasingly see pilots having problems with very basic aircraft handling. Contributing to this is the constant use of automation, so that little or no experience can be built up. I think solving this issue would be a big win in preventing many of the few accidents that still remain.

Some will say that we need 100% automation for that. That is fine with me. But we are just not there yet. As long as an autopilot can still turn itself off, we need a person up front who is capable of flying. Not only in America and Europe, where a lot of hand flying is still practiced, but everywhere in the world.

My issue with Airbus is that they are way too early in promoting the idea that automation solves everything, when it clearly does not in all cases (yet). This mindset is also reflected in the way they implement the controls, namely very differently from direct law. If I remember correctly, Airbus advised until recently that training in normal law alone was sufficient, as if getting into the other laws would never happen.

In this age of incomplete automation, I find it wiser to keep the controls behaving very close to how they will behave when an emergency arises. Then the practice will be there when needed. There is just one more problem: this has to be practiced not only in the sim (too few hours) but also on the line. There, Airbus will have a hard time telling airlines that landings should regularly be flown manually and in direct law.

Some more about evidence: I do not have the time (or the inclination) to dig up every report I have read and reread it to point to the paragraphs dealing with pilot capabilities. You did a good job yourself with the Asiana report, so you could do some more if you like. The next report I'll read is the AirAsia one, which should be out some time soon. Just trust me that I distrust journalists and always look up the report they talk about.

Kind regards, Vincent

- KL-666

- Posts: 781

- Joined: Sat Jan 19, 2013 2:32 pm

Re: Airbus madness

My issue with Airbus is that they are way too early in promoting the idea that automation solves everything, when it clearly does not in all cases (yet).

I'll quote Catherine Chen's words to summarize my position on the issue - she's a character from a sci-fi novel and, for a space pilot, terribly skeptical of machines:

"Ultimately, algorithms are designed from a certain context, under certain assumptions. They work well within that context, but not outside, and in fact there may be quite unexpected behaviour if a few individually reasonable assumptions aren't met all at once. In fact, this is quite like what I said earlier about how accidents occur."

"For example?", Sam asked. "Well, shuttle guidance algorithms for example assume you do not want to fly your shuttle underwater, or in a very dense atmosphere. They are designed primarily for an undamaged craft. And they assume things about your intention, like that you don't want to enter an atmosphere tail-first. They'd work well if any one of these points is not met --- the flight computer can control a damaged shuttle, but you've probably noticed during the strike missions that the AP becomes unstable in turbulence." Sam nodded; she remembered well. "Take a coincidence of two --- controlling a damaged craft in a dense, turbulent atmosphere, and you'll have a situation in which the guidance system is bound to make mistakes, because nobody ever coded a detailed set of rules to handle this particular situation --- and the rules coded to handle a damaged craft may easily not mesh well with the rules coded to handle a dense and turbulent atmosphere."

"Do you mean to say...", Samantha said, as she slowly realized. Cath nodded: "Yes --- I believe no flight computer could have saved the shuttle in the situation. Yet you did. Because you do not need to have all decision rules pre-coded --- you can adapt and improvise. See --- that's perhaps my more abstract reason not to trust machines. I'm a human being, I learn things through pain and mistakes --- but I can learn. The more I leave my decisions to algorithms, the more this ability is taken from me. If a virtual reality shields me from seeing anything unpleasant, how am I supposed to learn from it? If a decision algorithm prevents me from making mistakes in simple situations --- how am I supposed to learn to make sound decisions and deal with mistakes in complex situations? Of course humans make their own mistakes just the same --- but we're better at managing them than machines. Whereas we can't usually manage algorithmic mistakes --- especially when we're used to trusting computers to produce the right answer." She paused, clearly hesitating, and then added very quietly: "And, there's also a very personal reason. Ultimately, I feel that making my decisions myself and accepting responsibility for them is part of the essentials that make me human. I feel that letting any machine decide for me would take away from that even if that machine would make consistently better decisions."

- Thorsten

- Posts: 12490

- Joined: Mon Nov 02, 2009 9:33 am

Re: Airbus madness

Hello Thorsten,

There is an interesting philosophical question raised in that text: "Is it inherently impossible to have 100% automation?" I am inclined to believe the argument that algorithms are designed from a certain context. But I really do not know what new computers etc. the future will bring. For now I just look at "Where are we now?". And that is certainly not 100% automation.

The only thing where I differ is that I have nothing personal against computers. In the areas where they work correctly, I am happy to let them do the dirty work. That does not make me feel dehumanized. I am rather lazy.

Kind regards, Vincent

- KL-666

- Posts: 781

- Joined: Sat Jan 19, 2013 2:32 pm

Re: Airbus madness

Some posts were split off to the new topic Artificial intelligence and emergent behavior.

Low-level flying - It's all fun and games till someone loses an engine. (Paraphrased from a YouTube video)

Improving the Dassault Mirage F1 (Wiki, Forum, GitLab. Work in slow progress)

Some YouTube videos

- Johan G

- Moderator

- Posts: 6634

- Joined: Fri Aug 06, 2010 6:33 pm

- Location: Sweden

- Callsign: SE-JG

- IRC name: Johan_G

- Version: 2020.3.4

- OS: Windows 10, 64 bit

Re: Airbus madness

Please don't send support requests by PM, instead post your questions on the forum so that all users can contribute and benefit

Thanks & all the best,

Hooray

Help write next month's newsletter !

pui2canvas | MapStructure | Canvas Development | Programming resources

- Hooray

- Posts: 12707

- Joined: Tue Mar 25, 2008 9:40 am

- Pronouns: THOU

Re: Airbus madness

Nice system. I had expected that a few years earlier. Most likely it has a rule: on uncaught exception, stop. One can hardly do that with something like an aircraft, can one?
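The rule guessed at above can be sketched as a fail-safe wrapper: any unhandled condition drives the system to a known safe state. This is a hypothetical toy, not how any real train control system is implemented; the class and method names are invented.

```python
class HypotheticalTrainController:
    """Toy controller illustrating a fail-safe design; not any real train system."""

    def __init__(self) -> None:
        self.commanded_speed_kmh = 0.0
        self.stopped = False
        self.stop_reason = ""

    def step(self, measured_speed_kmh: float, target_speed_kmh: float) -> None:
        # Stand-in for real control logic: reject an implausible sensor reading.
        if measured_speed_kmh < 0:
            raise ValueError("implausible speed reading")
        self.commanded_speed_kmh = target_speed_kmh

    def emergency_stop(self, reason: str) -> None:
        self.commanded_speed_kmh = 0.0
        self.stopped = True
        self.stop_reason = reason


def run_step(ctrl: HypotheticalTrainController, measured: float, target: float) -> None:
    """Run one control step; any unhandled exception drives the train to its safe state."""
    if ctrl.stopped:
        return  # stay in the safe state until someone intervenes
    try:
        ctrl.step(measured, target)
    except Exception as exc:  # deliberately broad: "on uncaught exception, stop"
        ctrl.emergency_stop(repr(exc))
```

The design only works because a train at standstill is safe. An aircraft in flight has no such state, so the closest equivalent is disconnecting the automation and handing control back to the crew, which only helps if someone capable of flying is up front.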

Kind regards, Vincent

- KL-666

- Posts: 781

- Joined: Sat Jan 19, 2013 2:32 pm

Re: Airbus madness

Yeah, I think trains are, like, one-dimensional compared to airplanes... forward, backward, stop... lol

Sure, in between there are things like how fast to go, a curve coming up, nearing a station, etc., but otherwise I imagine it's as simple as ABC compared to airplanes...

- HJ1AN

- Posts: 374

- Joined: Sat Jul 25, 2009 5:45 am

- Callsign: HJ888

- Version: 3.4

- OS: OS X

Re: Airbus madness

Just spent the afternoon reading this report.

http://www.bea.aero/docspa/2013/sx-s130 ... 329.en.pdf

What strikes both the investigators and me is that the pilots raced carefree toward the glide slope and did not recognize that a tailwind might just slightly influence their ability to slow down and be fully configured in time on the glide slope.

The investigators name some standard probable causes, like fatigue and inadequate SOPs and training. But I see a deeper aspect to this, namely inadequate learning caused by flying too often on autopilot. I am not sure it applies exactly to this specific case, but in general it surely does.

If one (almost) always flies on autopilot, one loses not only basic flying skills but also an understanding of basic flight dynamics, like energy management on the glide slope. This results in asking the autopilot for unrealistic performance.

Let me explain: a pilot who always flies the glide slope on automation never notices that the autopilot is struggling to get rid of the speed in a tailwind, because of the higher rate of descent. He loses the sense that a tailwind may be a factor at all, until the day the tailwind is a bit stronger and the autopilot cannot slow down fast enough. Then he takes *reactive* action, like pulling out the spoilers.
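The "higher rate of descent" can be quantified with the usual glide-path geometry: to stay on a path of angle γ, vertical speed must equal groundspeed × tan γ, and a tailwind raises groundspeed at the same airspeed. A minimal sketch, assuming a 3° path, a 140 kt approach airspeed (an illustrative number, not taken from the report) and wind aligned with the runway; it ignores the difference between indicated and true airspeed.

```python
import math

KT_TO_FPM = 6076.12 / 60  # 1 knot = 6076.12 ft per hour, i.e. about 101.27 ft/min


def descent_rate_fpm(airspeed_kt: float, tailwind_kt: float, glide_deg: float = 3.0) -> float:
    """Vertical speed needed to stay on a glide path of glide_deg degrees.

    Simplification: airspeed taken as true airspeed, wind purely along the runway axis.
    """
    groundspeed_kt = airspeed_kt + tailwind_kt
    return groundspeed_kt * KT_TO_FPM * math.tan(math.radians(glide_deg))


for wind in (0, 10, 20):
    print(f"tailwind {wind:2d} kt: {descent_rate_fpm(140, wind):4.0f} ft/min")
```

With these assumptions every 10 kt of tailwind adds roughly 50 ft/min of sink at the same airspeed, and that extra descent is energy the aircraft has to dissipate while it is also trying to slow down.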

Now let's see what a pilot learns who regularly flies the full glide slope manually with a tailwind. He struggles to get rid of the speed, so he learns that a tailwind is something to worry about. Any time he hears there is a tailwind, he will take *proactive* action and make sure he starts down the glide slope at low speed.

Kind regards, Vincent

- KL-666

- Posts: 781

- Joined: Sat Jan 19, 2013 2:32 pm

Re: Airbus madness

I agree with that assessment.

It's funny to see how instructive coding is in that respect. The Space Shuttle FDM has gone from simple controls with a few simple helpers to complex controls based on rate controllers and a completely automatic pitch schedule.

Now, trying to do it like an airplane with 'stick controls airfoil', you would immediately notice what the trim of the vehicle was - just based on how much you had to pull on the stick to keep the nose at the required AoA. Likewise, control saturation due to fully deflected elevons, and the need to relieve load (speedbrake pitching moment), were obvious.

With the rate controller, you can just not care - the FCS takes care of trim automatically, right to the point where it can't - then it fails catastrophically.
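The trim effect described above can be reproduced with a toy pitch model, `dq/dt = k * delta + m_trim`, where `m_trim` is an out-of-trim moment. With direct control the pilot must hold `delta = -m_trim / k` and feels it in the stick; with a PI rate controller the integrator absorbs it silently until `delta` hits its limit. This is a normalized sketch with invented gains and limits, not the actual Space Shuttle FDM or its FCS.

```python
def direct_stick_needed(m_trim: float, k: float = 1.0) -> float:
    """Deflection the pilot must hold so that k * delta + m_trim is zero (felt in the stick)."""
    return -m_trim / k


def rate_controller_sim(m_trim: float, k: float = 1.0, delta_max: float = 1.0,
                        kp: float = 4.0, ki: float = 4.0,
                        dt: float = 0.01, t_end: float = 10.0) -> tuple[float, float]:
    """Toy PI pitch-rate controller holding a commanded rate of zero.

    Hypothetical normalized model: dq/dt = k * delta + m_trim, with delta clamped
    to +/- delta_max. Returns (final pitch rate, final deflection).
    """
    q = 0.0         # pitch rate
    integral = 0.0  # integrated rate error; this is what silently absorbs the trim
    delta = 0.0
    for _ in range(int(t_end / dt)):
        error = 0.0 - q  # the pilot only commands a rate, here zero
        integral += error * dt
        delta = max(-delta_max, min(delta_max, kp * error + ki * integral))
        q += (k * delta + m_trim) * dt  # explicit Euler step
    return q, delta


for m in (0.5, 1.5):
    q_final, delta_final = rate_controller_sim(m)
    print(f"m_trim={m}: stick a pilot would need {direct_stick_needed(m):+.2f}, "
          f"FCS deflection {delta_final:+.2f}, final pitch rate {q_final:+.3f}")
```

With `m_trim = 0.5` the controller settles at a deflection of about -0.5 and the pitch rate returns to zero while the pilot's input stays at zero; with `m_trim = 1.5` the deflection pins at the -1.0 limit and the pitch rate keeps growing, the toy version of "right to the point where it can't".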

On the other hand, using rate controllers has resulted in the precision that's actually necessary for successful ranging, and it allows you to focus on ranging rather than keeping the vehicle alive, so there's something to be said for it as well. But the basic message is - yes, it is deceptively easy to mentally drop some factor which is usually compensated automatically.

- Thorsten

- Posts: 12490

- Joined: Mon Nov 02, 2009 9:33 am

Re: Airbus madness

The problem with all of this is not "automation" but the "unmitigated belief in automation". See, a long time ago a pilot had to be able to fly a plane. Now, a pilot has to be able to use the autopilot AND to recognize when a situation arises that demands human intervention. The problem with the lack of training probably lies more in the second part. If they had realized they had to intervene, they would probably have been able to bring the Asiana flight down on the runway. The failure was not really the automation; the failure was that the crew didn't recognize there was a situation not covered by the automation.
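The second skill described above, recognizing that the automation is not covering the situation, amounts to continuously checking two things: is an automatic mode actually doing what the crew assumes, and is the result within tolerance? A hypothetical sketch of that check; the field names and the 5 kt tolerance are invented and are not taken from any accident report or real avionics.

```python
from dataclasses import dataclass


@dataclass
class Snapshot:
    """Hypothetical cockpit snapshot; field names invented for illustration."""
    airspeed_kt: float
    target_airspeed_kt: float
    automation_holding_speed: bool  # is any automatic mode actually controlling speed?


def intervention_reasons(s: Snapshot, tolerance_kt: float = 5.0) -> list[str]:
    """Return reasons why the crew should take over; an empty list means none found."""
    reasons = []
    if not s.automation_holding_speed:
        reasons.append("no automatic mode is controlling speed")
    if s.airspeed_kt < s.target_airspeed_kt - tolerance_kt:
        reasons.append("airspeed below target beyond tolerance")
    return reasons
```

The point of the sketch is that neither check is about hand-flying skill: both are about noticing that the assumption "the automation has this" no longer holds.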

- Jabberwocky

- Retired

- Posts: 1316

- Joined: Sat Mar 22, 2014 8:36 pm

- Callsign: JWOCKY

- Version: 3.0.0

- OS: Ubuntu 14.04

Who is online

Users browsing this forum: No registered users and 1 guest